Discrete images and image transforms

Here I will talk about images and the forms in which we manipulate them.

An image can be defined as a two-dimensional function $f(x, y)$, where $x$ and $y$ are spatial (plane) coordinates, and the amplitude of $f$ at any pair of coordinates $(x, y)$ is called the intensity of the image at that point.

The term gray level is often used to refer to the intensity of monochrome images. Monochrome images contain just shades of a single colour.

Color images are formed by a combination of individual monochrome images.

For example, in the RGB colour system a color image consists of three individual monochrome images, referred to as the red (R), green (G), and blue (B) primary (or component) images. This is why many of the techniques developed for monochrome images can be extended to color images by processing the three component images individually.
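To make "processing the three component images individually" concrete, here is a minimal sketch in plain Python (standard library only, rather than the MATLAB used later in this post). The tiny 2×2 image and the helper names `split_channels`, `merge_channels` and `negative` are made up for illustration; `negative` just stands in for any monochrome operation, applied to each channel in turn.

```python
# Hypothetical 2x2 RGB image: each pixel is an (R, G, B) triple of 0-255 values.
def split_channels(img):
    """Return the R, G and B component images as three 2D lists."""
    return [[[px[c] for px in row] for row in img] for c in range(3)]

def merge_channels(r, g, b):
    """Stack three component images back into one image of (R, G, B) triples."""
    return [[(r[i][j], g[i][j], b[i][j]) for j in range(len(r[0]))]
            for i in range(len(r))]

def negative(channel):
    """A monochrome operation: the photographic negative of 8-bit intensities."""
    return [[255 - v for v in row] for row in channel]

img = [[(255, 0, 0), (0, 255, 0)],
       [(0, 0, 255), (200, 100, 50)]]

# Process each component image on its own, then recombine.
r, g, b = split_channels(img)
result = merge_channels(negative(r), negative(g), negative(b))
print(result)  # [[(0, 255, 255), (255, 0, 255)], [(255, 255, 0), (55, 155, 205)]]
```

This works for point operations like the negative; other techniques may need more care than simply running them per channel.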

An interesting thing I came across is that the additive colour space based on RGB doesn't actually cover all the colours we can see. We use this model to fool the human eye, which has 3 types of cones that are (for evolutionary reasons) most sensitive to red, green and blue light.

If we mix red and green light, the result looks yellowish to us, but a spectrum detector would not be fooled.

Additive color is a result of the way the eye detects color, and is not a property of light.

Let's check out some basic MATLAB code to see how the 3 channels of red, green and blue make up a colour image.

Images are read into the MATLAB environment using the function imread. We use % to make comments.

```matlab
img = imread('lenna.png'); % img holds an RGB color image
imshow(img)                % display the image
imtool(img)                % interactive viewer: inspect individual pixel values
```

With the imshow command we can view the image. Using the imtool command we can see that each pixel has 3 corresponding intensities; the size of the variable img also tells us that there are 512*512 pixels, and that each pixel has 3 values.

As you can see, each pixel has an intensity value for each of the 3 channels (RGB). We can use the following MATLAB code to see the 3 monochrome images that make up the original image.

red = img(:,:,1); % Red channel

green = img(:,:,2); % Green channel

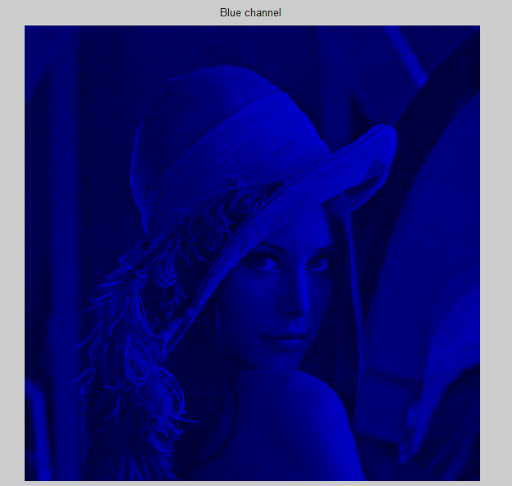

blue = img(:,:,3); % Blue channel

a = zeros(size(img, 1), size(img, 2));

just_red = cat(3, red, a, a);

just_green = cat(3, a, green, a);

just_blue = cat(3, a, a, blue);

back_to_original_img = cat(3, red, green, blue);

figure, imshow(img), title('Original image')

figure, imshow(just_red), title('Red channel')

figure, imshow(just_green), title('Green channel')

figure, imshow(just_blue), title('Blue channel')

figure, imshow(back_to_original_img), title('Back to original image')

With this code, red, green and blue are 512*512 matrices, which together made up img. a is just a black image of the same size; it is used to turn the grey monochrome images red, green and blue into shades of the colours red, green and blue respectively. The cat function concatenates arrays along a specified dimension: its first argument is the dimension, so 1 stacks the arrays vertically (adding rows), 2 places them side by side (adding columns), and 3 stacks the matrices as layers of a 3D array. So when we use 3, we are doing the reverse of what we did when decomposing the original image into three 512*512 matrices.

But to really get an idea of how much each colour contributes to the final image, we need to look at the 3 images in grey monochrome. Just add these 3 lines of code:

```matlab
figure, imshow(red), title('Red channel - grey monochrome')
figure, imshow(green), title('Green channel - grey monochrome')
figure, imshow(blue), title('Blue channel - grey monochrome')
```

In each of these images, the darker a pixel is, the lower its intensity value in that channel, and vice versa.

Sampling and Quantization

For a real-life image, the function $f(x, y)$ we talked about above is actually continuous with respect to the $x$- and $y$-coordinates, and also in amplitude. Converting such an image to digital form requires that the coordinates $(x, y)$, as well as the amplitude $f$, be digitized. Digitizing the coordinate values is called sampling; digitizing the amplitude values is called quantization. Thus, when $x$, $y$, and the amplitude values of $f$ are all finite, discrete quantities, we call the image a digital image.
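Here is a minimal sketch of the two steps in plain Python (standard library only), assuming a made-up continuous scene `f(x, y)` with intensities in [0, 1] on the unit square. The hypothetical helper `sample` evaluates it on an M × N grid (sampling), and `quantize` maps each amplitude to one of a fixed number of integer grey levels (quantization).

```python
import math

def f(x, y):
    """A hypothetical continuous 'scene': intensity in [0, 1] varying smoothly in x and y."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * x) * math.cos(2 * math.pi * y)

def sample(func, M, N):
    """Sampling: evaluate func at an M x N grid of points in the unit square."""
    return [[func(x / N, y / M) for x in range(N)] for y in range(M)]

def quantize(samples, levels):
    """Quantization: map each amplitude in [0, 1] to an integer in 0..levels-1."""
    return [[min(int(v * levels), levels - 1) for v in row] for row in samples]

digital = quantize(sample(f, 4, 4), 8)  # a 4x4 image with 8 grey levels (3 bits)
for row in digital:
    print(row)
```

Increasing M and N gives finer spatial resolution; increasing `levels` (for example 256 for 8-bit images) gives finer intensity resolution.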

I recommend Digital Image Processing Using MATLAB® by Rafael Gonzalez, Richard Woods and Steven Eddins. Chapter 2 should bring anyone up to speed with using MATLAB in image processing.

Frequency Domain of Images

Now that we have seen how images are stored in discrete form, let's move on to transforming them.

In class we went directly into working with the image in the frequency domain; spatial filtering comes later. So for now, let me tell you about an idea I really wanted to write about: why the frequency domain is so important.

First of all, let me refresh your memory about vector spaces, since I will be drawing an analogy between a vector and an image.

The key idea is that an image can be considered a linear combination of basis images, just as a vector can be considered a linear combination of basis vectors!

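Now let's extend the analogy. Consider the vector space $\mathbb{R}^3$, consisting of vectors of the form

$$\mathbf{v} = \begin{bmatrix} a \\ b \\ c \end{bmatrix}, \quad a, b, c \in \mathbb{R}.$$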

Similarly, consider the set of all monochrome images. For simplicity's sake, let them be square images with 9 pixels. We can represent such images using matrices of size $3 \times 3$, with a value of 0 indicating black, 1 indicating white, and the values in between denoting shades of grey.

Now that the foundations have been laid, let's ask ourselves how exactly the “image” is being stored. Well, it's stored in a matrix whose values indicate the shade of grey of each pixel. The point I am trying to make here is that the image data is distributed spatially: we take the entity known as an image, fragment it into multiple pieces known as pixels, and then encode the data as the intensities of those pixels.
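The pixel-by-pixel view is already a linear combination: a 3 × 3 image is the sum of 9 "standard basis images" (white at one pixel, black everywhere else), each weighted by that pixel's value. A small sketch in plain Python; the helper names `standard_basis_image`, `add` and `scale` are hypothetical.

```python
def standard_basis_image(i, j, size=3):
    """A size x size image that is white (1) at pixel (i, j) and black (0) elsewhere."""
    return [[1.0 if (r, c) == (i, j) else 0.0 for c in range(size)] for r in range(size)]

def add(a, b):
    """Pixel-wise sum of two images."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(k, a):
    """Multiply every pixel of image a by the scalar k."""
    return [[k * x for x in row] for row in a]

# A 3x3 grey-level image: 0 = black, 1 = white.
img = [[0.0, 0.5, 1.0],
       [0.2, 0.4, 0.6],
       [1.0, 0.0, 0.3]]

# Weighted sum of the 9 standard basis images; the weights are just the pixel values.
rebuilt = [[0.0] * 3 for _ in range(3)]
for i in range(3):
    for j in range(3):
        rebuilt = add(rebuilt, scale(img[i][j], standard_basis_image(i, j)))

print(rebuilt == img)  # True
```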

Now let's take this same idea into the vector space discussion. A vector (in $\mathbb{R}^3$ at least) has 3 components, and when we store it we separate it into the 3 numbers that make it up.

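Similarly, let's look at the standard basis of $\mathbb{R}^3$:

$$\mathbf{e}_1 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad \mathbf{e}_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad \mathbf{e}_3 = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$$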

So yes, we can construct any vector in $\mathbb{R}^3$ by a linear combination of the 3 standard basis vectors given above.

But what does that mean? And why are these 3 the standard ones, when any 3 linearly independent vectors (for example, 3 orthogonal vectors) could do this job?

What this means is that these three standard basis vectors point along the axes of a Cartesian coordinate system, and when we represent a vector as a linear combination of them, the scalar coefficient of each basis vector tells us how far the vector extends in the direction of that basis vector.
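A small numerical sketch of this in plain Python: for an orthonormal basis, the coefficient along each basis vector is simply the dot product of the vector with that basis vector. The vector `v` and the second basis (the standard basis rotated 45 degrees about the z-axis) are arbitrary choices for illustration.

```python
import math

def dot(u, v):
    """Dot product of two 3-component vectors."""
    return sum(a * b for a, b in zip(u, v))

v = (3.0, 4.0, 12.0)  # e.g. a velocity vector

# Standard basis of R^3: the coefficients are the components along x, y and z.
e = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
print([dot(v, b) for b in e])  # [3.0, 4.0, 12.0]

# Another orthonormal basis: the standard one rotated 45 degrees about the z-axis.
s = 1 / math.sqrt(2)
r = [(s, s, 0.0), (-s, s, 0.0), (0.0, 0.0, 1.0)]
coeffs = [dot(v, b) for b in r]

# The same vector is recovered as the weighted sum of the rotated basis vectors.
rebuilt = [sum(c * b[k] for c, b in zip(coeffs, r)) for k in range(3)]
print(coeffs, rebuilt)
```

The dot-product shortcut relies on the basis being orthonormal; for a general set of 3 linearly independent vectors you would instead solve a 3 × 3 linear system to find the coefficients.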

This has practical importance: when we have a vector that tells us, say, the velocity of an object, it's important to know the components of that velocity along the three axes. This is known as resolving into components.

So the point is that when we choose a basis for our vector space, what we are essentially doing is choosing how we want to represent the vectors themselves. We choose the standard basis here because the information we get when we break any vector in the space into a linear combination of these basis vectors, namely their coefficients, encodes the vector in a way that is useful to us!

Whew, that was awesome, right?!

Now comes the image's frequency domain part; this is not as straightforward as the vector space.

So the matrix we talked about encodes the image discretely in the spatial domain, but what do we mean when we talk about the frequency domain? And what are the basis images we use in the vector space of monochrome images?

Okay, to answer these questions let's think about natural images. Instead of defining the shade of each and every pixel in the image, what could be a better way of thinking about, or encoding, an image?

Well, the idea that the DCT (discrete cosine transform) uses is that natural images contain regions of slowly varying pixels (the sky, the ocean, skin, etc.). Because the pixels in such a region are similar in intensity, they are said to be correlated, and this correlation makes the spatial-domain way of storing the image somewhat redundant. So we should stop looking at an image as a collection of pixels and instead think of it as a literal weighted superposition of a set of basis images, where the basis images are mutually orthogonal. The weights used in this linear combination signify how much each basis image contributes to the final image.
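To put a number on "correlated", here is a sketch in plain Python that computes the Pearson correlation between each pixel and its right-hand neighbour along a single row. Both rows are synthetic: the smooth one is a hypothetical stand-in for a patch of sky, the other is random noise.

```python
import math
import random

def neighbour_correlation(row):
    """Pearson correlation between each pixel and its right-hand neighbour."""
    x, y = row[:-1], row[1:]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# A slowly varying row of 64 pixels versus 64 independent random pixels.
smooth = [100 + 20 * math.sin(k / 10) for k in range(64)]
random.seed(0)
noise = [random.randint(0, 255) for _ in range(64)]

print(round(neighbour_correlation(smooth), 3))  # very close to 1
print(round(neighbour_correlation(noise), 3))   # much closer to 0
```

A value near 1 means each pixel is almost predictable from its neighbour, which is exactly the redundancy a transform like the DCT exploits.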

To illustrate this, let's consider a small image with 64 pixels, which an $8 \times 8$ matrix can represent. To see it clearly, we zoom in 10x.

Now, there are various transforms such as the DCT, DFT, Hadamard, K-L, etc.,

and each of these provides a different set of basis images.

Each of them has its own pros and cons. For now, let me show you the basis images used in the DCT.

If you look closely, you can see that there are 64 basis images. Towards the top left corner the images vary smoothly, while as we go towards the bottom right corner they vary rapidly. So when we find the scalar value for each of these basis images such that their linear combination is the small image, the low-frequency coefficients are the coefficients of the smoothly varying basis images, while the high-frequency coefficients are those of the rapidly varying basis images in the bottom right corner.
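The basis images themselves are easy to generate. Below is a sketch in plain Python of the 64 basis images of the orthonormal 8 × 8 2D DCT-II (the variant usually meant by "the DCT"), assuming the common normalisation $\alpha(0) = \sqrt{1/N}$, $\alpha(k) = \sqrt{2/N}$, so that $B_{u,v}(x, y) = \alpha(u)\,\alpha(v)\cos\frac{(2x+1)u\pi}{2N}\cos\frac{(2y+1)v\pi}{2N}$. It also checks the mutual orthogonality of the basis images.

```python
import math

N = 8  # block size used in the example image

def alpha(k):
    """Normalisation factor that makes the DCT-II basis orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def dct_basis(u, v):
    """Basis image (u, v): u is the vertical frequency, v the horizontal one."""
    return [[alpha(u) * alpha(v)
             * math.cos((2 * x + 1) * u * math.pi / (2 * N))
             * math.cos((2 * y + 1) * v * math.pi / (2 * N))
             for y in range(N)] for x in range(N)]

def inner(a, b):
    """Inner product of two images: the sum of their element-wise products."""
    return sum(p * q for ra, rb in zip(a, b) for p, q in zip(ra, rb))

basis = {(u, v): dct_basis(u, v) for u in range(N) for v in range(N)}

# Top-left (0, 0) is constant: the smoothest possible image.
print(all(abs(val - 0.125) < 1e-12 for r in basis[0, 0] for val in r))  # True

# Bottom-right (7, 7) flips sign from each pixel to the next along a row.
row = basis[7, 7][0]
print(all(row[y] * row[y + 1] < 0 for y in range(N - 1)))  # True

# Orthonormality: inner product 1 with itself, 0 with a different basis image.
print(round(inner(basis[2, 3], basis[2, 3]), 6))
print(round(inner(basis[2, 3], basis[5, 1]), 6))
```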

This array of coefficients is known as the DCT of the image, and it encodes the image as a weighted superposition of a set of known basis images. For the image given above, the DCT would be:

Superimposing the coefficients over the basis images we get a proper idea of how much each basis image contributes to the final image.
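As a rough illustration of computing such a coefficient array, here is a plain-Python sketch of the forward orthonormal 2D DCT-II applied to a hypothetical smooth 8 × 8 ramp (the "A" from the figure is not reproduced here). Because the basis is orthonormal, each coefficient is just the inner product of the image with one basis image, and the total energy (sum of squares) is preserved.

```python
import math

N = 8

def alpha(k):
    """Normalisation factor that makes the DCT-II basis orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def dct2(img):
    """Orthonormal 2D DCT-II: coefficient (u, v) is the inner product of img
    with basis image (u, v)."""
    return [[alpha(u) * alpha(v) * sum(
                img[x][y]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]

# Hypothetical smooth 8x8 image: a soft diagonal ramp, NOT the figure's "A".
img = [[16 * x + 8 * y for y in range(N)] for x in range(N)]
coeffs = dct2(img)

energy = sum(c * c for row in coeffs for c in row)
low = sum(coeffs[u][v] ** 2 for u in range(2) for v in range(2))
print(round(coeffs[0][0], 3))  # DC coefficient: 8 x the mean intensity
print(round(low / energy, 4))  # share of energy in the 4 lowest-frequency coefficients
```

For a smooth image like this, almost all of the energy lands in a handful of low-frequency coefficients, which is the redundancy argument from earlier in numerical form, and the reason DCT-based compression such as JPEG can discard many high-frequency coefficients.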

Thus, to get the image back, we compute the weighted sum, as shown below.

The image on the left grows closer and closer to the actual image (which is actually a pretty blurred A) and finally becomes it. The image in the middle is the product of the coefficient and the corresponding basis image (shown on the right).

So now I hope you understand what we mean by the frequency domain: these coefficients are the representation of the image in the frequency domain, and the coefficient matrix is the transformed image itself.
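The weighted sum can be sketched in plain Python as well. This hypothetical example uses an 8 × 8 image with a bright diagonal stripe (again not the figure's "A"), computes all 64 DCT coefficients, and then adds the terms coefficient × basis image one at a time, lowest frequencies (smallest u + v) first, tracking the root-mean-square (RMS) error of the partial sum. The ordering by u + v is an assumption for illustration; the figure's order may differ.

```python
import math

N = 8

def alpha(k):
    """Normalisation factor that makes the DCT-II basis orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def dct_basis(u, v):
    """Basis image (u, v) of the orthonormal 8x8 2D DCT-II."""
    return [[alpha(u) * alpha(v)
             * math.cos((2 * x + 1) * u * math.pi / (2 * N))
             * math.cos((2 * y + 1) * v * math.pi / (2 * N))
             for y in range(N)] for x in range(N)]

B = {(u, v): dct_basis(u, v) for u in range(N) for v in range(N)}

# Hypothetical test image: a bright diagonal stripe on a dark background.
img = [[200.0 if abs(x - y) <= 1 else 20.0 for y in range(N)] for x in range(N)]

# Forward transform: each coefficient is the inner product with its basis image.
coeff = {k: sum(img[x][y] * b[x][y] for x in range(N) for y in range(N))
         for k, b in B.items()}

def rms_error(a, b):
    """Root-mean-square difference between two images."""
    return math.sqrt(sum((p - q) ** 2 for ra, rb in zip(a, b)
                         for p, q in zip(ra, rb)) / (N * N))

# Inverse transform as a running weighted sum, lowest frequencies first.
order = sorted(B, key=lambda k: (k[0] + k[1], k))
partial = [[0.0] * N for _ in range(N)]
errors = []
for k in order:
    partial = [[partial[x][y] + coeff[k] * B[k][x][y] for y in range(N)]
               for x in range(N)]
    errors.append(rms_error(partial, img))

for n in (1, 6, 15, 36, 64):
    print(f"{n:2d} terms: RMS error {errors[n - 1]:.4f}")
```

Because the basis is orthonormal, each added term lowers the total squared error by exactly the square of its coefficient, so the error never increases and reaches zero (up to rounding) once all 64 terms are included.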

We saw the basis images used in the DCT. Here, because our objective was to separate the image into its smoothly varying and sharply changing patterns, we used these basis images. So the key point I wanted to share is that a set of basis images is chosen out of a wish to look at the image from a particular perspective.

Just as we used the standard basis when we wanted vectors in terms of their components along the axes, we choose basis images because we want to understand the image in terms of some idea.

In brief, the idea that motivates the DCT is smoothness,

for the DFT it is periodicity in the image,

and the Walsh–Hadamard transform is also about periodicity, but it has a computational edge over the DFT because computing it requires no multiplications (only additions and subtractions).
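To see why no multiplications are needed, here is a sketch of the fast Walsh–Hadamard transform in plain Python. Every step is a "butterfly" that replaces a pair of values (x, y) with (x + y, x - y). This version is unnormalised and returns coefficients in the natural (Hadamard) order; the sequency-ordered Walsh variant only permutes the outputs. The 2D version applies the 1D transform to every row and then every column, as is usual for separable transforms.

```python
def fwht(signal):
    """Fast Walsh-Hadamard transform (natural order, unnormalised).
    Uses only additions and subtractions; len(signal) must be a power of 2."""
    a = list(signal)
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of 2")
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                x, y = a[i], a[i + h]
                a[i], a[i + h] = x + y, x - y  # butterfly: one add, one subtract
        h *= 2
    return a

def fwht2(img):
    """2D transform of a square image: 1D transform of every row, then every column."""
    rows = [fwht(r) for r in img]
    cols = [fwht(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

print(fwht([1, 0, 1, 0, 0, 1, 1, 0]))  # [4, 2, 0, -2, 0, 2, 0, 2]
print(fwht2([[1, 2], [3, 4]]))         # [[10, -2], [-4, 0]]
```

Applying the unnormalised transform twice returns the input multiplied by its length, so dividing by the length gives the inverse.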

But the idea behind the KL transform is brilliant, and I think I'll cover it in detail in my post on image transforms.

Well, as usual, any and ALL feedback is welcome.

Many thanks to:

Digital Image Processing Using MATLAB,

Rafael Gonzalez, Richard Woods, Steven Eddins

Hanakus

Written with StackEdit.
